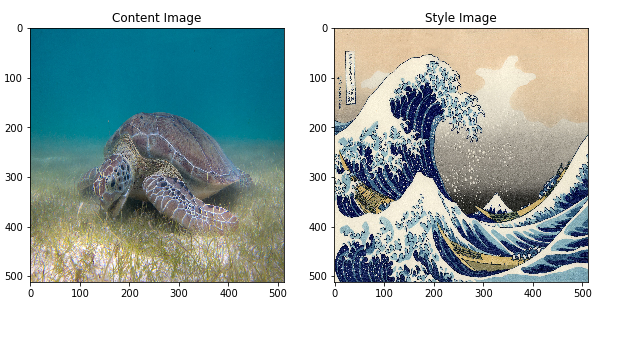

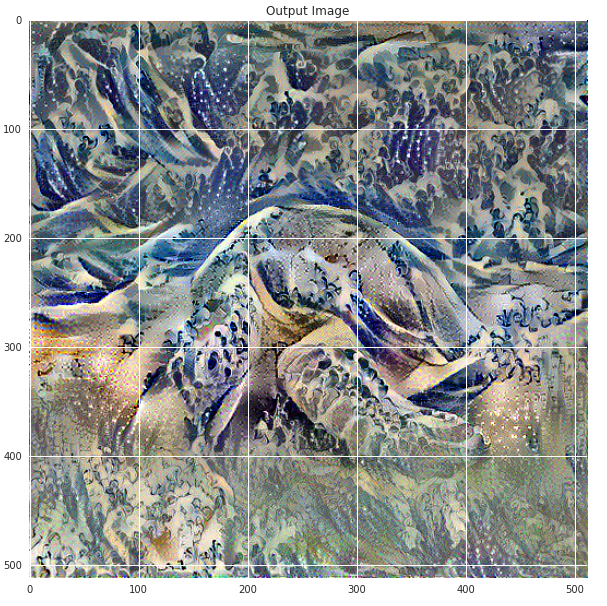

Neural Style Transfer

What can it do?

How?

Given a style image image_style and a content image image_content.

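Define two distance functions, style_distance(img1, img2) and content_distance(img1, img2). Then generate an output image that is close to image_content under content_distance and close to image_style under style_distance.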
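
Written as an optimization problem, the output image x is found by gradient descent on its pixels, starting for example from the content image or from noise. This is a sketch: α and β are illustrative weights for the two terms, not names taken from these notes.

$$
x^{*} = \arg\min_{x}\; \alpha\,\mathrm{content\_distance}(x,\ \mathrm{image\_content}) + \beta\,\mathrm{style\_distance}(x,\ \mathrm{image\_style})
$$

A larger β/α ratio gives a more stylized result; a smaller one preserves more of the content image's structure.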

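content_distance: the Euclidean distance (in practice usually its square) between the intermediate representations of the two images, i.e. the feature maps that one layer of a pretrained CNN produces for each image.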
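
A minimal NumPy sketch of content_distance. It assumes the intermediate representations have already been extracted as arrays; which network and layer produce them is left open here. It returns half the squared Euclidean distance, the form used in the original content loss. The square and the 1/2 only rescale the value and simplify the gradient.

```python
import numpy as np


def content_distance(feat1: np.ndarray, feat2: np.ndarray) -> float:
    """Half the squared Euclidean distance between two feature maps.

    feat1 / feat2: activations of the same CNN layer for the two images,
    e.g. shape (H, W, C); computing them is assumed, not shown here.
    """
    if feat1.shape != feat2.shape:
        raise ValueError("feature maps must have the same shape")
    diff = feat1 - feat2
    return 0.5 * float(np.sum(diff ** 2))


a = np.zeros((2, 2, 3))
b = np.ones((2, 2, 3))
print(content_distance(a, b))  # 6.0
```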

style_distance: a bit more complicated. It compares the Gram matrices of the two images' intermediate representations. See the raw code:

```python
def gram_matrix(input_tensor):
```

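The Gram matrix captures the correlations between the different channels of an intermediate layer. Entry (i, j) is the inner product of the feature maps of channels i and j, summed over all spatial positions. style_distance is then the squared difference between the Gram matrices of the two images, typically summed over several layers.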
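
A NumPy sketch of both pieces. It is not the original body of gram_matrix above, which is not preserved here. It assumes activations of shape (H, W, C) with no batch dimension. The 1/(H·W) normalization and the equal per-layer weights are illustrative choices.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of one layer's activations, shape (H, W, C) -> (C, C).

    Entry (i, j) is the inner product of channel i and channel j over all
    H * W spatial positions, divided by H * W (the scaling is a convention).
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)  # one row per spatial position
    return flat.T @ flat / (h * w)


def style_distance(feats1, feats2, weights=None) -> float:
    """Weighted sum over layers of squared Frobenius distances between Gram matrices.

    feats1 / feats2: lists of (H, W, C) activations for the same layers of
    the two images (hypothetical inputs; feature extraction is not shown).
    """
    if len(feats1) != len(feats2):
        raise ValueError("need the same layers for both images")
    if weights is None:
        weights = [1.0 / len(feats1)] * len(feats1)  # equal layer weights
    total = 0.0
    for w_l, f1, f2 in zip(weights, feats1, feats2):
        diff = gram_matrix(f1) - gram_matrix(f2)
        total += w_l * float(np.sum(diff ** 2))
    return total
```

Because gram_matrix sums over positions, shuffling the spatial positions of a feature map leaves it unchanged. It keeps which features co-occur and discards where they occur, which is the usual intuition for why it captures texture-like style rather than layout. The result is C×C whatever the spatial size, so the style image and the generated image need not have the same resolution.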

New explanation:

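We start from the Gram matrix used in style transfer and try to explain why a Gram matrix can represent the style of an image. After reading the style transfer paper, this was the point I found most puzzling. By chance, we discovered that matching the Gram matrices of two images is mathematically exactly equivalent to minimizing the MMD distance, with a second-order polynomial kernel, between the deep activations of the two images. MMD (Maximum Mean Discrepancy) measures the difference between two distributions using samples drawn from each of them. So in essence, what the style transfer paper does is match the distribution of the generated image's deep activations to the distribution of the style image's activations. This can be viewed as a domain adaptation problem, so it is natural to approach it with an idea similar to AdaBN. A series of follow-up works extended this idea, including AdaIN [3] and several GAN-based style transfer methods.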
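
A quick numerical check of the claimed equivalence, as a NumPy sketch. Each spatial position's C-dimensional activation is treated as one sample, and the biased MMD² estimator uses the kernel k(x, y) = (xᵀy)². The check compares this against the squared Frobenius distance between the unnormalized Gram matrices, divided by M², where M is the number of positions (assumed equal for both images). The helper names mmd2_poly2 and gram_distance are hypothetical, and the random arrays stand in for real activations.

```python
import numpy as np


def mmd2_poly2(X: np.ndarray, Y: np.ndarray) -> float:
    """Biased MMD^2 with kernel k(x, y) = (x . y)^2.

    X, Y: (M, C) arrays; each row is the activation at one spatial position.
    """
    kxx = (X @ X.T) ** 2
    kyy = (Y @ Y.T) ** 2
    kxy = (X @ Y.T) ** 2
    m, n = len(X), len(Y)
    return float(kxx.sum() / m**2 + kyy.sum() / n**2 - 2 * kxy.sum() / (m * n))


def gram_distance(X: np.ndarray, Y: np.ndarray) -> float:
    """Squared Frobenius distance between the unnormalized (C, C) Gram matrices."""
    return float(np.sum((X.T @ X - Y.T @ Y) ** 2))


rng = np.random.default_rng(0)
M, C = 64, 8
F = rng.standard_normal((M, C))  # stand-in for generated-image activations
S = rng.standard_normal((M, C))  # stand-in for style-image activations
print(np.isclose(mmd2_poly2(F, S), gram_distance(F, S) / M**2))  # True
```

The identity follows from Σᵢⱼ (xᵢᵀyⱼ)² = ⟨XᵀX, YᵀY⟩, so the three kernel sums expand to ‖XᵀX − YᵀY‖². A Gram-based style loss therefore differs from this MMD² only by a constant factor, and minimizing one minimizes the other.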

Li, Yanghao, Naiyan Wang, Jiaying Liu, and Xiaodi Hou. “Demystifying neural style transfer.” arXiv preprint arXiv:1701.01036 (2017)